Abstract

This article explains how modern AI drafts contracts using retrieval-augmented generation (RAG) combined with large language models (LLMs). We introduce what LLMs like GPT-4 are and how they function (tokens, context windows, and weight parameters), highlighting how newer models improve upon older ones. We then define RAG and distinguish an AI model’s built-in “memory” from external legal knowledge bases.

A detailed overview is given of how a custom system (such as that used by British Contracts) leverages thousands of precedent documents and practice notes to ground contract drafting.

We break down how the AI interprets a user’s prompt and generates a contract draft, utilising both its trained neural knowledge and retrieved reference material. The probabilistic nature of text generation (guided by model weights and learned probabilities) is demystified in a practical legal context.

We discuss current limitations of AI contract drafting as of mid-2025, including hallucinations, context length constraints, and interpretive ambiguities, and why these shortcomings mean that expert human review by a specialist solicitor remains essential.

Introduction

Artificial intelligence is rapidly transforming how legal documents are prepared. AI contract drafting tools are emerging that can produce first-draft contracts or clauses on demand. These tools often rely on large language models (LLMs), powerful text generation systems trained on vast amounts of language data, combined with retrieval-augmented generation (RAG) techniques that let the AI draw from a library of legal documents. The result is an AI that can draft documents in a style resembling a seasoned lawyer by leveraging both its general language proficiency and specific legal knowledge sources.

This article provides a comprehensive overview of how such AI-driven contract drafting works. We will explain, in accessible terms, the technology behind LLMs like GPT-4 and why they represent a leap over older models. We will then discuss RAG and how it addresses some limitations of standalone AI models by incorporating up-to-date legal information from external databases. Using the example of British Contracts’ custom AI system, we illustrate how thousands of precedent contracts and practice notes can be used to “ground” the AI’s responses in reliable legal text. We then walk through how the AI interprets a user’s prompt and generates a contract, shedding light on the role of model weights and probabilities in guiding the drafting process. Crucially, we examine the current limitations of AI-generated contracts, such as hallucinations (fabricated content), loss of context in long documents, or ambiguities in interpretation, which persist even as of June 2025. Finally, we emphasise why, despite the impressive capabilities of AI, a human lawyer’s review remains an indispensable part of the process, ensuring that the drafted contract is both legally sound and tailored to the client’s needs.

Throughout this discussion, the aim is to demystify the mechanics of AI contract drafting. All examples and discussions are based on the context of English law and practice. By the end, the reader should have a clear understanding of how an AI like the one at British Contracts drafts a contract and, equally important, where the AI’s role ends and the human lawyer’s expertise must take over.

Understanding Large Language Models (LLMs)

At the heart of AI contract drafting is the large language model itself. An LLM is a type of AI trained to generate human-like text by predicting what words (or more precisely, tokens) should come next in a sequence. These models are “large” in terms of the number of parameters (learned weights) they contain. For example, OpenAI’s GPT-3 model (released in 2020) has 175 billion parameters and can take into account a context window of about 2,048 tokens (roughly 1,500 words) when producing text. By contrast, newer models like GPT-4 (2023) are more advanced. OpenAI has not disclosed GPT-4’s exact size, but it is widely reported to be larger and more capable, and it can handle a much larger context (up to 8,192 tokens by default, with variants supporting 32,768 tokens, which is about 50 pages of text). This means GPT-4 can consider far more prior text when drafting a response than GPT-3 could, allowing it to draft longer, more complex documents without losing track of earlier details.
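
To make the context window figures concrete, the following is a minimal sketch of a budget check: it estimates how many tokens a document needs and reports which of the models mentioned above could hold it. The ratio of roughly 0.75 words per token is a rule-of-thumb assumption, and the function names are illustrative; a real system would count tokens with the model's own tokeniser.

```python
import math

# Rule-of-thumb assumption: one token is roughly 0.75 English words.
WORDS_PER_TOKEN = 0.75

# Context window sizes quoted in the text above.
CONTEXT_WINDOWS = {
    "GPT-3": 2048,
    "GPT-4 (default)": 8192,
    "GPT-4 (extended)": 32768,
}


def estimate_tokens(text: str) -> int:
    """Estimate the token count from the whitespace-separated word count."""
    words = len(text.split())
    return math.ceil(words / WORDS_PER_TOKEN)


def models_that_fit(text: str, reserved_for_output: int = 0) -> list[str]:
    """Return the models whose window holds the text plus the reserved output budget."""
    needed = estimate_tokens(text) + reserved_for_output
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]


# Stand-in for a 4,500-word agreement, leaving room for 1,000 tokens of output.
draft = "word " * 4500
print(estimate_tokens(draft))
print(models_that_fit(draft, reserved_for_output=1000))
```

Reserving part of the window for the output matters in practice, because the prompt and the generated draft share the same token budget.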

How LLMs function: Large language models are built using neural network architectures (most commonly the Transformer architecture) that excel at processing sequences of text. They break the input text into tokens (which may be words or sub-word pieces) and transform these through multiple layers of weighted connections. During training, an LLM adjusts its weights (parameters) by reading massive amounts of text, essentially “learning” the patterns of language. By training, the model develops an internal representation of language that encodes syntax (sentence structure), semantics (meaning), and even some real-world knowledge, all in the form of numerical weight values distributed across the network.

An LLM does not retrieve answers from a database nor follow explicit grammar rules; instead, it uses statistical patterns learned during training to generate text one token at a time. In effect, the model is pre-trained to “predict the next word” in a sequence based on the words that came before. It does this prediction using the probabilities encoded in its weights. At each step of text generation, the LLM looks at the current context (the prompt plus whatever text has been generated so far). It uses its billions of weighted connections to produce a probability distribution over the vocabulary for the next token. For instance, if an LLM has seen many examples of contracts, when it encounters the prompt “This Agreement is made on”, it will assign a very high probability to the token “the” as the next word (since “This Agreement is made on the [date]” is a common phrasing in contract templates). It might assign lower probabilities to other words that make less sense in that context. The model then typically selects the highest-probability token (or sometimes samples from the top few, depending on the settings) and appends it to the text before predicting the next token, and so on. This autoregressive generation (each new token prediction includes the previously generated tokens as part of the context) continues until the model produces a full, coherent answer.
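
The generation loop described above can be sketched in a few lines. This is a toy illustration, not how any production model is implemented: the score table `TOY_LOGITS` is invented for the example and looks only at the last token, whereas a real LLM computes its scores from the whole context using its weights. What the sketch does show faithfully is the mechanism: scores are turned into probabilities with a softmax, the most probable token is chosen, and that token is appended to the context before the next prediction.

```python
import math

# Hypothetical scores (logits) for the next token, keyed by the last token of
# the context. A real LLM conditions on the whole context and derives these
# scores from billions of weights.
TOY_LOGITS = {
    "on": {"the": 4.0, "this": 1.5, "behalf": 0.5},
    "the": {"[date]": 3.0, "parties": 1.0},
    "[date]": {"<end>": 5.0},
}


def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Convert raw scores into a probability distribution that sums to 1."""
    peak = max(logits.values())  # subtract the maximum for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}


def generate_greedy(prompt: list[str], max_new_tokens: int = 10) -> list[str]:
    """Repeatedly append the highest-probability token until <end> or the limit."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        logits = TOY_LOGITS.get(tokens[-1])
        if logits is None:
            break  # the toy table has no continuation for this token
        probs = softmax(logits)
        next_token = max(probs, key=probs.get)
        if next_token == "<end>":
            break
        tokens.append(next_token)  # the new token becomes part of the context
    return tokens


prompt = ["This", "Agreement", "is", "made", "on"]
print(softmax(TOY_LOGITS["on"]))
print(" ".join(generate_greedy(prompt)))
```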

Because LLMs generate text based on learned probabilities, they are incredibly adept at producing fluent and contextually appropriate language. The style and terminology of the output will mimic the patterns in the training data. For legal text, this means an LLM can output language that sounds very much like a professionally drafted contract, complete with formal tone, legal jargon, and structured clauses, as these are patterns it absorbed from statutes, case law, or contract examples in its training corpus. GPT-4, for instance, has demonstrated performance on professional benchmarks like bar exams at a level approaching human experts. This reflects not a genuine understanding or sound legal reasoning, but rather an ability to statistically reproduce the kinds of language and solutions found in legal texts it has encountered. GPT-4 vastly outperforms its predecessor GPT-3.5 in following complex instructions and producing reliable outputs on such tasks, thanks to improvements in architecture and extensive fine-tuning (including techniques like reinforcement learning from human feedback to make it better at giving helpful, truthful answers). In simpler terms, newer LLMs are more “aligned” and context-aware than older ones. They handle nuanced prompts better, make fewer blatant errors, and can work with much more information at once.

Despite these advancements, it is important to remember that even the latest LLMs operate as powerful predictive text engines. They don’t “know” facts in a human sense; their “knowledge” is encoded in probabilistic relationships between words learned from data. If an LLM was trained on a large general corpus up to 2021, for example, it might not be aware of laws or regulations enacted after that time, unless updated through further training. Moreover, sheer size and power have limits. GPT-4, while incredibly advanced, is explicitly noted to be “less capable than humans in many real‑world scenarios”. It can excel at generating text and even passing standardised tests, but it lacks the proper understanding and judgment that a human legal expert possesses. This is where retrieval techniques and human oversight become vital, as we explore next.

Retrieval-Augmented Generation (RAG): Extending Memory with Knowledge Bases

One of the key challenges in utilising LLMs for tasks such as contract drafting is ensuring that the AI has access to relevant and up-to-date knowledge. A language model’s built-in “memory”, the information it absorbed during training, is static and limited. It cannot learn new facts after training unless it’s retrained or fine-tuned, and it might not have seen niche or recent legal materials during its original training. This is where retrieval-augmented generation (RAG) comes into play. RAG is a technique that augments the LLM’s capabilities by allowing it to fetch and incorporate information from an external knowledge repository when generating an answer.

In simple terms, RAG combines an LLM with a searchable knowledge base of documents. Instead of relying solely on whatever the model “remembers” in its weights, the AI is enabled to retrieve relevant text from a specified collection of data (for example, a database of contracts or a library of legal research) and use that text to inform its output. According to the formal definition, “Retrieval-augmented generation (RAG) is a technique that enables large language models to retrieve and incorporate new information”, ensuring the model does not respond until it has consulted a set of provided documents that supplement its built-in training data. In other words, the LLM’s answer will be grounded in actual reference text from the knowledge base, rather than coming purely from the model’s trained imagination.

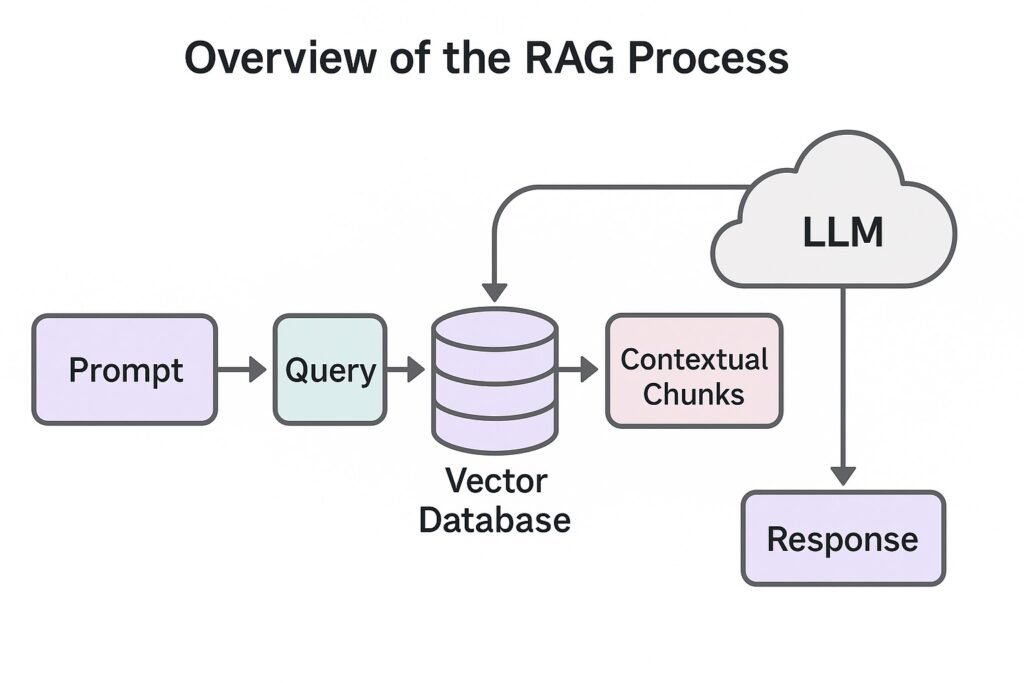

Diagram: Overview of the RAG process. RAG works by inserting an information-retrieval step before the generation step of the LLM.

The figure above illustrates the concept: the AI system takes the user’s query (prompt) and first represents it in a form suitable for searching (for instance, by creating an embedding or keyword query). It then retrieves relevant documents or text chunks from a vector database or document index that contains the external knowledge (in our case, legal precedents, clauses, commentary, etc.). These retrieved pieces of text (the contextual chunks) are then augmented onto the query. Effectively, they are attached to or included in the prompt given to the LLM. Finally, the LLM generates a response (the draft contract or clause) that is informed by both the original query and the retrieved reference information.

By requiring the model to refer to external sources, RAG addresses several issues inherent to standalone LLMs. First, it enables the model to utilise domain-specific and up-to-date information not included in its training data. For example, even if an LLM wasn’t explicitly trained on the latest 2025 changes in data protection law, a RAG system could retrieve the relevant 2025 regulation text from a knowledge base and provide it to the model, enabling the model to draft a clause consistent with the new law. Second, RAG helps reduce hallucinations and factual errors. Because the model is not generating purely from its internal memory, but is anchored by real documents, it is less likely to “make stuff up.” Ars Technica described RAG as “blending the LLM process with a web search or document lookup process to help LLMs stick to the facts.” This approach has been shown to mitigate AI hallucinations, such as chatbots inventing policies that don’t exist or recommending non-existent legal cases to support a lawyer’s argument. Such hallucinations are a known problem. A high-profile incident in 2023 involved an AI tool fabricating case citations, which went undetected by the user and led to an embarrassing court filing. With RAG, the AI would ideally pull actual case text from a trusted database instead of hallucinating a citation.

Another major benefit is that RAG decouples knowledge updates from model training. Traditional LLMs require costly re-training to update their knowledge, but with RAG, the system can be kept current simply by adding new documents to the knowledge base. As Amazon Web Services (AWS) describes, “RAG allows LLMs to retrieve relevant information from external data sources to generate more accurate and contextually relevant responses,” reducing reliance on static training data that can become outdated. When new information becomes available, rather than having to retrain the model, one can augment the model’s external knowledge base with the updated information. This makes the maintenance of an AI legal assistant far more practical in a rapidly changing field like law.

It’s worth noting that RAG is a relatively new paradigm. The term and initial technique were introduced in a 2020 research paper by Facebook (Meta), and they have quickly gained traction for AI applications in law, finance, medicine, and other areas where accuracy and up-to-date information are critical. By using RAG, an AI can act almost like a specialised research assistant. Whenever it gets a query, it first “researches” the topic in a body of relevant documents, and then formulates its answer based on that research. For contract drafting, this means the AI can retrieve actual clauses and legal wording from precedent documents and incorporate them into the draft it produces, rather than relying solely on memory.

In summary, RAG combines the fluent text generation capabilities of LLMs with the factual grounding provided by an external knowledge base. The model’s “memory” (its training) provides broad language skills and general knowledge, while the knowledge base provides specific, context-rich information on demand. The difference is akin to a law student taking an exam from memory versus an open-book exam. RAG gives the model that open book. Next, we will see how British Contracts leverages this concept by building a robust legal knowledge base for its AI, and how the drafting process incorporates those external materials step by step.

Grounding the AI in Legal Precedents and Practice Notes

For an AI system to draft useful contracts, it needs more than just generic language ability. It needs substance: knowledge of legal terms, clause structures, and the expectations of specific contract types. British Contracts’ AI, for example, is designed to draft documents under English law, which means it must reflect the conventions and requirements of English legal drafting. To achieve this, the AI is supported by an extensive, custom-built legal knowledge base comprising thousands of documents. These include legal precedents (such as prior contract templates, model clauses, and sample agreements) and practice notes or guidelines that encapsulate expert knowledge about contract drafting.

A “precedent” in this context is essentially a template or example of a well-drafted contract or clause that lawyers trust. Law firms often have troves of precedents (e.g. a standard Non-Disclosure Agreement template, a model Shareholders’ Agreement, etc.) that drafters use as starting points. Practice notes are explanatory documents, often produced by legal publishers or firms, that outline best practices, legal requirements, and tips for drafting specific clauses (for instance, a practice note on force majeure clauses might explain what to include, along with relevant examples). By incorporating these into the AI’s knowledge base, the system gains access to authoritative, field-tested text and expert commentary when drafting.

How does this work in practice? In a RAG-enabled system, all these legal documents are indexed for retrieval. Indexing typically means converting each document (or sections of it) into a mathematical representation (an embedding vector) and storing it in a database optimised for similarity search. The British Contracts AI processes each precedent and practice note, creating embeddings that capture the semantic content of the text. For example, a confidentiality clause from a precedent and a paragraph from a practice note about confidentiality obligations would be embedded into the database. Alongside this, metadata can be stored (such as tags indicating this is a “Confidentiality clause” or “NDA template”), which can also aid retrieval.
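
As a rough illustration of indexing and similarity search, the sketch below stores a few text chunks with a metadata tag and ranks them against a query by cosine similarity. Everything here is a simplifying assumption: `embed` is a bag-of-words counter standing in for a learned embedding model, `ToyIndex` is an in-memory list standing in for a vector database, and the sample clauses are invented. It is not a description of the British Contracts implementation.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector (stand-in for a learned model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@dataclass
class IndexedChunk:
    text: str
    tag: str         # metadata, e.g. "Confidentiality clause"
    vector: Counter  # the stored embedding


class ToyIndex:
    """In-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.chunks: list[IndexedChunk] = []

    def add(self, text: str, tag: str) -> None:
        self.chunks.append(IndexedChunk(text, tag, embed(text)))

    def search(self, query: str, top_k: int = 2) -> list[IndexedChunk]:
        query_vec = embed(query)
        ranked = sorted(
            self.chunks,
            key=lambda c: cosine_similarity(query_vec, c.vector),
            reverse=True,
        )
        return ranked[:top_k]


index = ToyIndex()
index.add("The Consultant shall keep confidential all information disclosed by the Company.",
          "Confidentiality clause")
index.add("The Company shall pay the Consultant the fees set out in Schedule 2.",
          "Fees clause")
index.add("Practice note: confidentiality obligations should define confidential information.",
          "Practice note")

for hit in index.search("confidentiality of confidential information"):
    print(hit.tag, "|", hit.text)
```

Adding a further precedent later is just another `add` call. Nothing about the language model changes, which is the sense in which retrieval keeps knowledge updates separate from model training.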

Because the knowledge base is continuously updated, new precedents or guidance can be added over time. Suppose a new standard form is released by the Law Society, or a new piece of legislation (like an update to consumer protection laws) comes into effect. The relevant documents can be added to the repository, and the AI system then has them at its disposal immediately. This ensures that the AI’s contract drafting remains current with legal developments without requiring retraining of the core model. As noted earlier, avoiding retraining saves both computational and financial cost while keeping the system’s knowledge up to date.

Importantly, using a curated legal knowledge base grounds the AI in authoritative sources. When the AI drafts a contract clause, it can be instructed (via prompt engineering) to draw language from the closest precedents in the database. For instance, if asked to draft an employment contract clause on holiday entitlement, the system might retrieve a clause from a precedent Employment Contract as well as a snippet from a practice note on the Working Time Regulations. These retrieved texts provide the correct legal phrasing and information (such as “Employees are entitled to 28 days’ paid annual leave inclusive of bank holidays, in accordance with the Working Time Regulations 1998…”), which the AI can then incorporate or adapt in the new draft. The result is that the AI’s output is “grounded” in real legal text rather than being invented from scratch. This grounding greatly increases the reliability of the draft. It’s much more likely to reflect standard formulations and to include the necessary legal components, because those components are present in the source material the AI is referencing.

From a user perspective, the influence of the knowledge base might be visible in that the AI can provide citations or references to its sources. A well-designed system might display the source passages it relied on to the user. This transparency is beneficial. RAG enables LLMs to incorporate sources into their responses, allowing users to verify the cited material. In the context of contract drafting, a solicitor using the AI might get a draft clause along with footnotes pointing to the exact precedent clauses or practice notes that informed it. The solicitor can then quickly double-check those sources to ensure the AI correctly understood and applied them. This not only builds trust in the AI’s output but also accelerates the vetting process, since the solicitor knows exactly where the language came from, akin to having a trainee point to the textbook they copied a clause from.

Note that in our public-facing AI, we do not enable citations, because they would refer back to documents in our knowledge base to which users do not have access.

In summary, a custom AI system like British Contracts’ leverages a rich, specialised legal knowledge base to augment the LLM. The knowledge base contains thousands of precedent documents (spanning various contract types and clauses) and an ever-growing collection of practice notes and legal explainers. During drafting, the AI retrieves the most relevant pieces from this trove to ground its responses in real legal language and knowledge. This approach combines the best of both worlds: the fluency and speed of an AI model, and the reliability and domain-specific accuracy of curated legal sources.

From User Prompt to Draft: How the AI Drafts a Contract

Now that we have covered the components, the LLM and the retrieval system with legal documents, let’s walk through how the AI goes from a user’s prompt to a drafted contract or clause.

This process involves several stages that can be broken down into distinct steps. Imagine a user, perhaps a lawyer or a businessperson, using the British Contracts platform. They provide a prompt describing what they need, for example: “Draft a simple consultancy agreement where a company contracts a freelance IT consultant, including standard IP and confidentiality terms.” The AI will perform roughly the following steps to produce the draft:

- Understanding the User’s Request: The AI first interprets the user prompt. Thanks to the LLM’s training, it can comprehend natural language requests and identify the key elements. In our example, the AI recognises that the user requires a consultancy agreement involving a freelance IT consultant and a company, and that the agreement should include standard clauses regarding intellectual property (IP) and confidentiality. The system essentially translates this prompt into an internal representation, creating an embedding of the query that captures the details needed for retrieval and drafting. Behind the scenes, the system may also reformulate or expand the prompt for better search results, a technique often called query rewriting or query expansion. Conceptually, this step involves determining the type of contract and the necessary clauses.

- Retrieving Relevant Documents: Next, the AI’s retrieval module kicks in. Based on the interpreted query, the system searches the legal knowledge base for relevant materials. For a consultancy agreement, it will look for any precedent contracts labelled “Consultancy Agreement”. It may also search for clauses or notes relating to IP ownership in contractor scenarios and confidentiality clauses. The search could use semantic similarity, via embeddings, so that even if the prompt wording doesn’t exactly match the documents, it can find conceptually related text. For example, it may retrieve: (a) a precedent Consultancy Agreement from the database, (b) a practice note on consultant IP rights (covering who owns intellectual property created by a contractor), and (c) a sample confidentiality clause or an NDA precedent. The system selects the most relevant documents or snippets, say the top 5–10 pieces of text that cover the required topics, to use in drafting. Each retrieved snippet may be a few paragraphs that focus on a particular clause or issue.

- Augmenting the Prompt with Retrieved Text: Once relevant material is fetched, the AI augments the original prompt with this information. This means that the content from the retrieved documents is appended to the user’s query, typically in a structured manner. For instance, the augmented prompt to the LLM might look like: “Using the following sources, draft a consultancy agreement…” and then list the key clauses or points from the retrieved precedents, such as: “Clause from Precedent A: [text of standard IP clause]…”, “Clause from Precedent B: [text of confidentiality clause]…”, etc. Essentially, the LLM is being given a cheat sheet of relevant legal text and asked to weave that into the answer. The model doesn’t simply copy and paste them (unless explicitly told to). Instead, it will integrate and adapt them to form a coherent draft. Because the prompt now contains both the user’s instructions and injected legal content from the knowledge base, the model’s task is to synthesise the two: to produce a contract that fits the user’s requirements using the provided reference language as needed.

- Generating the Draft Contract: Finally, the LLM generates the output – the contract draft – based on the augmented prompt. This is where the model’s linguistic prowess and knowledge of legal drafting patterns come into play. It typically begins by writing the contract title, followed by the introductory clause (including recitals or definitions, if applicable), and then each section of the agreement, often in a logical order (such as services, payment, IP rights, confidentiality, termination, etc.). The contract concludes with boilerplate clauses. As it generates, the model draws not only on the general legal drafting skills encoded in its weights (from training) but also on the specific phrasing and facts included in the retrieved text. For example, if the practice note indicated “In consultancy agreements, often the consultant retains ownership of IP but grants the company a license,” the AI will incorporate that concept into the IP clause it drafts. The result is a tailored contract that addresses the user’s scenario, enriched with clauses that mirror established precedents. Essentially, the LLM draws from the augmented prompt and its internal representation of training data to synthesise an answer, i.e. the contract, tailored to the user.
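
The steps above can be sketched as a small pipeline. This is an illustrative outline under stated assumptions, not the actual British Contracts code: `Snippet`, `build_augmented_prompt`, and `draft_contract` are hypothetical names, the retrieval and generation functions are placeholders passed in by the caller, and the bracketed clause texts are the same placeholders used in the description of the augmented prompt.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Snippet:
    source: str  # hypothetical label such as "Precedent A"
    text: str    # the retrieved clause or practice-note extract


def build_augmented_prompt(user_request: str, snippets: list[Snippet]) -> str:
    """Combine the user's request with retrieved reference text into one prompt."""
    lines = [
        "Using the following sources, draft the document requested below.",
        "",
        "Sources:",
    ]
    for number, snippet in enumerate(snippets, start=1):
        lines.append(f"[{number}] {snippet.source}: {snippet.text}")
    lines += ["", f"Request: {user_request}"]
    return "\n".join(lines)


def draft_contract(
    user_request: str,
    retrieve: Callable[[str], list[Snippet]],
    generate: Callable[[str], str],
) -> str:
    """Retrieve reference text, augment the prompt, then generate the draft."""
    snippets = retrieve(user_request)                        # retrieval step
    prompt = build_augmented_prompt(user_request, snippets)  # augmentation step
    return generate(prompt)                                  # generation step


request = (
    "Draft a simple consultancy agreement where a company contracts a freelance "
    "IT consultant, including standard IP and confidentiality terms."
)
retrieved = [
    Snippet("Precedent A (IP clause)", "[text of standard IP clause]"),
    Snippet("Precedent B (confidentiality clause)", "[text of confidentiality clause]"),
]


def fake_retrieve(query: str) -> list[Snippet]:
    """Placeholder for a knowledge-base search."""
    return retrieved


def fake_generate(prompt: str) -> str:
    """Placeholder for the LLM call; it only reports the prompt length."""
    return f"DRAFT (placeholder) written from a prompt of {len(prompt)} characters"


print(build_augmented_prompt(request, retrieved))
print(draft_contract(request, fake_retrieve, fake_generate))
```

Numbering the sources in the prompt is one simple way to let a system refer back to them later, for example when citations are shown to the user.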

The entire process above might happen in seconds. Importantly, because the answer was grounded in actual documents, a system with citations enabled can also show which sources it used for each part of the draft. For instance, the confidentiality clause in the output might come with a note or citation referencing the precedent or practice note that inspired it. This goes back to the transparency advantage of RAG. The user can see the origin of each part of the draft and check it against the source, rather than having to take on trust that it wasn’t hallucinated.

To illustrate concretely: if our user requested a consultancy agreement, the output might start like:

Consultancy Agreement

Date: ___ (to be completed)

Parties: (1) ABC Ltd, registered in England and Wales… and (2) John Doe, a self-employed IT consultant…

1. Services. The Consultant agrees to provide IT consulting services as described in Schedule 1…

2. Fees. The Company shall pay the Consultant… (payment terms) …

3. Intellectual Property. Any intellectual property created by the Consultant in the course of providing the Services shall be owned by the Consultant. The Consultant hereby grants to the Company a perpetual, royalty-free licence to use such intellectual property for the Company’s internal business purposes…

4. Confidentiality. The Consultant shall keep confidential all information… [etc., a standard confidentiality clause] …

5. Term and Termination. …

6. General. … (boilerplate clauses like governing law (England & Wales), entire agreement, etc.) …

Signed by the Parties: …

In the above draft, clauses 3 and 4 (IP and confidentiality) closely reflect the retrieved precedents and practice notes. The model chose those words because they were present in the reference material provided during augmentation, ensuring the language aligns with standard practice. Clause 3, for example, explicitly grants ownership to the consultant but licenses it to the company, a detail it might have pulled straight from a practice note as instructed. The overall structure (clauses 1–6) and other content are derived from the model’s general knowledge of contracts and may also be influenced by having seen similar agreements during training. Notice how the AI maintained a formal tone and structured layout. These are hallmarks of legal drafting that it has learned.

Behind all this, the weights and probabilities in the LLM guided each word choice, which we will examine in the next section. However, the high-level takeaway is that the AI doesn’t blindly create text; it follows a pipeline that combines user intent with retrieved legal knowledge and then utilises its generative capacity to produce a coherent and context-specific draft. This pipeline enables AI systems to draft not just generic text, but also legally meaningful contracts that are responsive to a user’s needs.

Weights, Probabilities, and the Mechanics of Text Generation

It is helpful to understand how the AI decides on the wording of each sentence it drafts, especially from the perspective of a legal professional who might wonder, “Where did that phrasing come from?” The answer lies in the model’s weights and probabilistic nature.

As noted earlier, an LLM is essentially a mathematical model that has been trained to predict the most likely next token given a sequence of prior tokens. The model’s weights, millions or billions of numerical parameters, encode the correlations and patterns learned from its training data. When the AI is drafting a contract, it is effectively performing a probability-guided search through the space of possible sentences. At each step in generating text, the model outputs a list of possible following tokens along with a probability for each. These probabilities reflect how likely each token is given the preceding text and everything the model “knows.”

For example, suppose the model has generated “The Consultant shall” in a confidentiality clause. The next word could be “not”, “keep”, “maintain”, etc. Based on the legal language patterns the model has observed, it might predict that “keep” has, for instance, a 60% probability, “maintain” 30%, and other verbs smaller percentages in that context. “Keep confidential” is a very common phrase, so “keep” would be weighted as the most probable continuation, and the model would choose it. This is why AI outputs often sound so formulaic and familiar: the AI is inclined to select the phrasing that is statistically most common or appropriate for that context. In contracts, those common phrasings tend to be precisely the well-worn legal boilerplate and standard clauses lawyers use (since those appear frequently in the training and reference data).

The model’s weights also encapsulate domain knowledge in a statistical form. For instance, the model might “know” (from training on many contracts) that in a governing law clause the token “England” is frequently followed by “and Wales”. When drafting a governing law clause, the model therefore assigns a high probability to “England and Wales” appearing together. Similarly, it might have learned that the word “indemnify” is often followed by “and hold harmless” in specific clauses, and will reproduce that coupling due to the weight patterns.

It’s important to realise that the AI is not consciously applying legal reasoning or checking against an internal rulebook. It is following learned statistical cues. In a practical legal drafting context, this often works surprisingly well: the language of contracts is repetitive and formulaic by nature, so a probabilistic model is very good at getting the form right. It “knows” that contracts start with titles and parties, that definitions are often in capital letters, that key obligations use “shall,” that indemnities and warranties have linguistic structures and so on, because those patterns were common in its training. The weights guide it to follow these patterns without the model ever having to understand why they exist.

Consider the phrase “time is of the essence”. This is a classic contract phrase that indicates that fulfilling an obligation by a specific deadline is a critical part of the contract. If the user’s prompt or retrieved context suggests a need for strict timing, the model’s next-word probabilities might strongly favour completing the idiom once it starts. After generating “time is”, the weight-influenced probabilities make “of” highly likely next, with “the essence” to follow. Thus, the model correctly outputs the full phrase. It’s not because the AI truly grasps the legal effect of that phrase, but because statistically, whenever “time is” appears in a contract context in its training data, “of the essence” usually follows.
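
The idea that frequency alone can drive the completion is easy to see with a deliberately simplified model. The sketch below counts which word follows which in a four-sentence corpus (invented for this example) and turns the counts into probabilities. A real LLM does not store an explicit table like this; its statistics are spread across its weights and depend on far more context than one preceding word. The principle, that common continuations receive high probability, is the same.

```python
from collections import Counter, defaultdict

# Hypothetical mini-corpus standing in for the contract text a model learns from.
CORPUS = [
    "time is of the essence in this agreement",
    "time is of the essence for delivery",
    "time is of the essence",
    "time is not of the essence unless stated",
]


def bigram_counts(sentences: list[str]) -> defaultdict:
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def next_word_probabilities(counts: defaultdict, word: str) -> dict[str, float]:
    """Turn the follower counts for one word into relative frequencies."""
    followers = counts[word]
    total = sum(followers.values())
    return {w: n / total for w, n in followers.items()} if total else {}


counts = bigram_counts(CORPUS)
print(next_word_probabilities(counts, "is"))
print(next_word_probabilities(counts, "the"))
```

In this corpus, “of” follows “is” in three sentences out of four, so it is the favoured continuation even though the model has no notion of what the phrase means.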

The probabilistic nature of generation also means the AI can introduce variation or choose alternative wording if probabilities are close or if a bit of randomness (the “temperature” setting) is applied. For example, it might say “The Consultant shall maintain the confidentiality of all proprietary information” in one draft and in another could equally say “The Consultant shall keep confidential all proprietary information”. Both mean essentially the same thing, and both are phrases it has seen; which one appears is just a matter of which had the slightly higher probability at the time of generation. In legal drafting, however, we usually prefer consistency and the most standard phrasing (to avoid any unintended change in meaning), so such AI systems often operate with settings that select the highest-probability (most standard) tokens, yielding conventional language.
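To make the effect of the temperature setting concrete, here is a minimal sketch of temperature-scaled sampling. The candidate words and their scores are hypothetical, and treating temperature 0 as a pure “pick the top token” rule is a common convention assumed here, not a description of any particular vendor’s API.

```python
import math
import random

def softmax_with_temperature(scores, temperature):
    """Convert raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores.values()]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return {tok: e / total for tok, e in zip(scores, exps)}

def pick_token(scores, temperature, rng):
    """Greedy choice at temperature 0, otherwise sample from the scaled distribution."""
    if temperature == 0:
        return max(scores, key=scores.get)
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]

# Hypothetical scores for the word after "The Consultant shall ..."
scores = {"maintain": 2.0, "keep": 1.8, "preserve": 0.5}

rng = random.Random(0)  # fixed seed so the sketch is repeatable
greedy = [pick_token(scores, 0, rng) for _ in range(5)]
sampled = {pick_token(scores, 1.0, rng) for _ in range(200)}
p_low = softmax_with_temperature(scores, 0.1)
p_high = softmax_with_temperature(scores, 1.0)
```

With these made-up scores, the greedy setting returns “maintain” every time, while sampling at temperature 1.0 also produces “keep” in a substantial share of runs, which mirrors the two alternative phrasings above.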

One might ask, does this probabilistic approach ever lead to incorrect outcomes in a legal sense? It can. For example, if there are two common patterns in the data, one that’s legally safer and one that’s less so, the model might choose the latter by chance if it’s nearly as common. This is why some AI-generated clauses might sound fine, but upon close inspection by a solicitor, they may not be the optimal choice. The AI might, for instance, omit a specific qualifier or include an extra condition simply because half the precedents it saw did so and the other half did not, so it effectively guessed one way. The model does not truly understand the implications; it relies on frequency and context correlation.

To put it metaphorically, an LLM drafting text is like a very sophisticated auto-complete – given the start of a sentence, it completes it in the most likely way. By doing so for an entire contract, it effectively auto-completes the contract clause by clause, guided by what it has observed in thousands of other contracts. The weights in the model ensure that legally savvy completions (which were prevalent in training data) have a high probability. This makes the AI a fast drafter that almost intuitively “knows” how to phrase things.

For a legal professional, this mechanism has two key implications. First, it explains why AI drafts often look impressively good: they are stitched together from the fabric of countless real legal documents, via probabilities that favour tried-and-true language. Second, it highlights why human review is still crucial: the AI might string together clauses that individually sound fine but collectively have inconsistencies, or might choose a less common variant of wording that subtly changes the meaning. Since it lacks true understanding, it won’t reliably catch those issues on its own. We turn next to the specific limitations that can arise from these dynamics, despite this powerful technology.

Limitations of AI Contract Drafting

Even as AI models and retrieval techniques have improved dramatically, AI-generated contracts still have important limitations. As of mid-2025, any practitioner using such tools should be aware of these issues:

- Hallucinations (Fabricated Content): AI models can sometimes “make up” information that was not in the prompt or the knowledge base. This could be as harmless as inventing a generic company name, for example, or as dangerous as fabricating a legal clause or citation. For instance, without proper grounding, an AI might confidently insert a clause citing a law or regulation that does not exist or reference a case with a convincing name and case reference number that is entirely fictitious. There was a notable incident in which an AI tool provided a lawyer with several case citations that were later found to be non-existent. This apparent hallucination went unnoticed until a judge flagged it. RAG helps mitigate this by forcing the model to stick to real documents, but hallucinations can still occur if the model misinterprets documents or if the prompt isn’t well-managed. In a contract draft, a hallucination might be something like an obligation that has no basis in law or an incorrect statement (e.g., “Pursuant to GDPR Article 45, parties must do X” – when Article 45 says no such thing). These mistakes are not intentional falsehoods by the AI; they are a byproduct of the model’s attempt to predict a plausible answer. Nonetheless, they can be perilous in a legal document.

- Context Limitations: While modern models like GPT-4 have large context windows (able to consider tens of pages of text at a time), there are still practical limits on how much information can be processed in one go. If a user prompt and the retrieved materials together exceed the model’s context window, some information will be omitted, potentially leading to loss of context. This means the AI might “forget” or ignore some earlier parts of the draft as it writes later sections. In a long contract, if not handled carefully, details mentioned in one clause might not be consistently reflected in another clause because the model can’t look back far enough. Additionally, the model doesn’t truly memorise what it wrote earlier in the draft beyond the context window, so cross-references (“as stated in Clause 5 above”) or maintaining consistency of terms can be a challenge if the document is very lengthy. There’s also the issue of session context in an interactive setting: if a user asks the AI to modify a clause in a second prompt, the AI only knows what’s in the conversation history (or what’s re-provided to it). Important context may be dropped unless explicitly included in the prompt. In summary, although the capacity is large, it’s not infinite, and the AI may lose track of details in very complex or lengthy drafting tasks.

- Interpretive Ambiguity and Misunderstanding: AI models lack genuine understanding of language; they have only knowledge of statistical correlations. This can lead to misinterpretation of ambiguous inputs or sources. A dramatic example was highlighted by MIT Technology Review: an AI read the title of an academic article “Barack Hussein Obama: America’s First Muslim President?” and then stated as a factual answer that “The United States has had one Muslim president, Barack Hussein Obama,” clearly failing to grasp that the article title was a rhetorical question and not a statement of fact. The AI saw words and regurgitated them out of context. In contract drafting, this sort of issue might translate to the AI misinterpreting a user’s request or a precedent. For example, if a practice note says “Do not include X in such contracts,” there is a risk the AI might overlook the negation and erroneously include X, especially if the phrasing is subtle or the model gives more weight to another part of the text. Similarly, the AI might not fully grasp nuances, such as the distinction between “best efforts” and “reasonable efforts” obligations. If both phrases appear in training data, it might treat them as interchangeable unless clearly instructed otherwise, potentially causing ambiguity or an unintended promise in the draft. Sarcasm, questions, or uncommon phrasing can trip up the model’s literal word-matching tendencies, as seen in the Obama example. Legal texts are usually straightforward, but if the AI misinterprets the context or intent behind the provided language, the output could be inappropriate. For example, a practice note might describe a clause to criticise it, but the AI might take that clause and use it, thinking it’s recommended.

- Lack of True Legal Reasoning: Although not a single technical “bug,” this is a fundamental limitation: current AI does not reason or truly understand legal principles. It cannot determine whether a contract adequately protects a client’s interests or if a clause has all the necessary elements. It can only make an educated guess based on patterns. For example, if tasked with drafting a novel clause that combines unfamiliar concepts, the AI might produce something that appears plausible but whose legal effect is uncertain or nonsensical upon close analysis. The AI also doesn’t know the external world or the specific business context unless it is informed, so it might draft terms that are legally sound but commercially unworkable, or vice versa. As of June 2025, no AI can reliably ensure that a contract is “fair” or “enforceable” in every scenario. It can only emulate forms and styles that are typically used.

- Sensitivity to Prompt Quality: The AI’s output is highly dependent on how the task is described. A slight ambiguity or omission in the user’s prompt could result in a draft that overlooks key elements. For instance, if the user doesn’t explicitly mention a governing law, the AI might default to a common choice (like English law, if that’s prevalent in its data), which might be fine or might be wrong for the context. If the user requests a “simple” agreement, the definition of “simple” is open to interpretation: the AI might exclude specific clauses to make it shorter, possibly omitting something important. Essentially, the AI is not proactive; it won’t add clauses that the prompt or retrieved sources didn’t hint at, even if a human lawyer would think of them. This means the onus is on the user to provide comprehensive prompts, and even then, the AI might not fully grasp a vague instruction, leading to omissions or irrelevant inclusions.

- Formatting and Execution Details: Sometimes AI outputs require editing for format or specifics. It may insert placeholders (such as “[Company Name]”) that the user needs to fill in. This is usually fine and expected. However, it may also lack the ability to format complex schedules, tables, or formulas in a contract, as these may be rare in its training data. As of 2025, models still occasionally produce inconsistent numbering of clauses or stray from a template style partway through a document, especially if the prompt-provided examples had such issues.
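The context limitation in the list above can be illustrated with a short sketch of how a system might pack retrieved passages into a fixed budget. Everything here is an assumption for illustration: the function names are hypothetical, the budget is tiny, and counting whitespace-separated words is only a crude stand-in for a real tokeniser.

```python
def rough_token_count(text):
    """Very crude proxy: real tokenisers split text differently from whitespace."""
    return len(text.split())

def pack_context(prompt, passages, budget):
    """Keep passages in ranked order while they fit; report what was dropped."""
    used = rough_token_count(prompt)
    kept, dropped = [], []
    for passage in passages:
        cost = rough_token_count(passage)
        if used + cost <= budget:
            kept.append(passage)
            used += cost
        else:
            dropped.append(passage)  # the model never sees this material
    return kept, dropped, used

prompt = "Draft a consultancy agreement governed by English law"
passages = [
    "Precedent clause on confidentiality obligations of the consultant",
    "Practice note on intellectual property ownership in consultancy work",
    "Precedent clause on termination for convenience",
]

kept, dropped, used = pack_context(prompt, passages, budget=24)
print("kept:", kept)
print("dropped:", dropped)
```

In this example the intellectual property practice note does not fit and is silently left out, so a draft produced from the remaining context could omit IP terms without any visible warning. Surfacing the `dropped` list to the reviewing solicitor is one simple way to make that omission visible.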

To be clear, many of these limitations are actively being researched and addressed. The use of RAG alleviates some of these issues. For example, hallucination is less likely when the AI has real text to refer to, and ambiguity is reduced when instructions are precise and sources are authoritative. OpenAI’s own evaluations of GPT-4, for instance, noted significant improvements in factual accuracy and following instructions compared to prior models, though they acknowledge it is far from perfect. Similarly, we expect future models to handle longer contexts and provide a more nuanced understanding. But as of mid-2025, an AI draft is not a finished product. It is a very advanced first draft that can save a lawyer considerable time; however, it will need to be reviewed and refined by a human, which brings us to the final, crucial point: the role of the human lawyer in AI-assisted drafting.

Why Expert Human Review Remains Essential

No matter how sophisticated the AI, in legal drafting, the ultimate responsibility lies with the human lawyer. There are several reasons why expert human review is, and will remain, indispensable when using AI to draft contracts:

- Ensuring Legal Accuracy and Suitability: A specialist solicitor has the training and experience to spot if a clause is legally inadequate or risky. AI might draft a clause that is grammatically perfect and superficially on point. Yet, a human lawyer might notice it fails to address a certain scenario or doesn’t align with the client’s specific interests. For example, the AI might propose an indemnity clause that seems standard, but a solicitor could realise it doesn’t carve out certain liabilities that in this deal should be excluded. Only a human, exercising legal judgment, can truly assess if the contract does what the parties intend and is enforceable under the relevant law. The AI cannot reliably make those judgments. It doesn’t understand fairness, risk allocation, or the business goals behind the contract. As one illustration, the AI could easily miss a critical exception or add an unnecessary provision simply because those issues didn’t come up clearly in the prompt or were balanced differently in its training examples. A human lawyer is needed to correct such issues.

- Contextual and Commercial Insight: A solicitor understands the broader context, including the parties’ relationship, industry norms, and negotiating positions, and can tailor the contract accordingly. AI won’t know, for instance, that a particular client is very risk-averse about IP and thus would want extra robust IP warranties beyond the norm, unless explicitly instructed. Nor will the AI grasp which points are likely to be contentious in negotiations. The human drafter, knowing what typically triggers client or counterparty concerns, can adjust the AI’s draft (or the prompt before generation) to address those nuances. Essentially, the solicitor provides the strategic brain that the AI lacks. The AI is a tool for generating text, but determining what text is appropriate in light of the deal’s context is a human role.

- Verification of Facts and Sources: As we discussed, AI can hallucinate or misquote. A diligent solicitor must verify that any laws, regulations, or case references in the draft are accurate and up to date. If the AI cites a precedent or practice note (as a RAG-based system might), the solicitor should read those sources to ensure the AI interpreted them correctly. The presence of citations in the AI’s output makes this easier, as one can directly check the sources. However, the responsibility lies with humans to do so. This is akin to a junior lawyer drafting a clause and a senior lawyer double-checking the legal citations and reasoning. The AI is like a tireless junior. It will produce a draft at lightning speed, but it might also bluff confidently where it’s unsure. The senior (the human lawyer) must catch any such bluffs. For example, if the AI included, say, a reference to “Data Protection Act 2022”, the solicitor should recognise there is no UK Act by that exact name in 2022 (the AI perhaps hallucinated the year) and correct it to “Data Protection Act 2018” or the UK GDPR as appropriate.

- Interpreting Ambiguity and Client Intent: If something in the AI’s draft is unclear or could be interpreted in multiple ways, a human needs to refine the language. AI might draft an ambiguous clause because it averaged several examples or because the prompt was ambiguous. The solicitor can spot these and rephrase for clarity. Moreover, only a human can communicate with the client to clarify intent. If the AI draft raises a question, such as, “Did you want termination for convenience or not?” because it wasn’t specified, the lawyer must resolve that and then adjust the draft accordingly. The AI can’t have that real-world dialogue or make judgment calls; it will either include something by assumption or leave it out, but the human must ensure the final contract aligns with the parties’ actual intent.

- Ethical and Professional Accountability: Under English law (and indeed in most jurisdictions), a contract drafted for a client ultimately falls under the responsibility of the legal professional advising on it. If an error in the contract leads to a dispute or loss, the liability could fall on the firm or solicitor. Using AI does not remove that responsibility. The Solicitors Regulation Authority (SRA) would expect a solicitor to exercise appropriate oversight when using any technology, ensuring that competence and diligence are maintained. The AI is not, at least not yet, a regulated legal advisor. It cannot sign off on a contract or take legal responsibility for it. Therefore, a solicitor must review and sign off on the AI’s work just as they would review a junior lawyer’s draft. This includes not only checking for correctness but also tailoring the draft to the client’s circumstances in ways an AI wouldn’t know to do. It’s worth noting that even OpenAI has highlighted that their models can produce incorrect or biased outputs and should be used with caution in high-stakes matters. Lawyers must incorporate such guidance into their usage, essentially treating the AI’s draft as a helpful first version that expedites the process, but not as the final authority.

- Synergy of AI and Human Strengths: The ideal workflow is one where the AI and the solicitor complement each other. The AI excels at rapidly generating a structurally sound draft with the boilerplate and standard terms in place (saving the solicitor hours of typing and re-typing). It can also suggest language for unusual provisions by drawing on its vast training data, sometimes even pointing the human to helpful precedent ideas they might not have recalled. The human, on the other hand, excels at critical thinking, understanding nuance, and making value judgments. A specialist solicitor will review the AI’s draft and apply their expertise to adjust wording, add or remove provisions, and ensure everything makes sense legally and commercially. In areas where the AI’s output seems off, the solicitor can either fix it manually or even query the AI further (e.g., “Rewrite clause 5 to protect the client’s IP better, given they own all project results”), effectively using the AI iteratively under guidance. In this way, the AI becomes a powerful assistant, handling the heavy lifting of initial drafting and repetitive tasks, while the solicitor provides oversight, direction, and final polish. This human-in-the-loop approach is widely recommended as a best practice when deploying AI in legal work, as it leverages the strengths of both: the AI’s speed and breadth of knowledge, and the human’s depth of understanding and accountability.
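Part of the source-verification step described in the list above can be supported by simple tooling. The following is a minimal sketch, not a substitute for legal review: the list of verified statutes is an assumed, hand-maintained set, the function name is hypothetical, and the naive pattern only catches references of the form “… Act YYYY” written in capitalised words.

```python
import re

# Assumed, hand-maintained list of statutes the reviewer has already verified.
VERIFIED_STATUTES = {
    "Data Protection Act 2018",
    "Unfair Contract Terms Act 1977",
    "Consumer Rights Act 2015",
}

# Naive pattern: a run of capitalised words ending in "Act" plus a four-digit year.
# A capitalised word directly before the title (e.g. "The") would be captured too.
STATUTE_PATTERN = re.compile(r"\b((?:[A-Z][a-z]+ )+Act \d{4})\b")

def flag_unverified_statutes(draft):
    """Return cited statute names that are not on the verified list."""
    cited = STATUTE_PATTERN.findall(draft)
    return [name for name in cited if name not in VERIFIED_STATUTES]

draft = (
    "Each party shall comply with the Data Protection Act 2022 and "
    "the Unfair Contract Terms Act 1977."
)

flagged = flag_unverified_statutes(draft)
print(flagged)
```

A flagged name only tells the solicitor where to look. Deciding whether the reference should read “Data Protection Act 2018”, the UK GDPR, or something else remains a matter of legal judgment.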

AI has not replaced the need for human lawyers in contract drafting. It has augmented their capabilities. A tool like the one developed by British Contracts can dramatically reduce the time spent on mundane tasks (such as formatting agreements or recalling standard clause language) and enable solicitors to focus more on high-level analysis and client-specific tailoring. However, the solicitor’s role remains crucial in verifying accuracy, injecting real understanding, and making judgment calls that no machine can currently replicate. Much like the advent of spell-checkers didn’t eliminate the need for careful proofreading, AI drafters don’t eliminate the need for careful legal review. They catch the low-hanging fruit and leave the sophisticated reasoning and final decisions to the human expert.

Conclusion

AI drafting technology, powered by large language models and retrieval-augmented generation, represents a significant leap forward in the field of legal document production. An AI like GPT-4 can parse a user’s instructions, fetch relevant legal materials from a vast knowledge base, and produce a well-structured contract draft in a matter of seconds, a task that might take a human many hours. The underlying mechanisms, from tokenisation and context windows to embeddings and vector searches, work in harmony to make this possible. The AI leverages its training (billions of parameters encoding the patterns of language) to ensure the output is fluent and stylistically correct, and it leans on the curated knowledge base to ensure the content is factually grounded in real, up-to-date legal text. This combination of neural text generation and authoritative source material enables AI systems to draft documents that are not merely boilerplate copies but are dynamically tailored to the prompt’s requirements, often with impressive coherence and a legal-sounding precision.

However, as we have detailed, these systems are not infallible. As of mid-2025, AI still struggles with genuine understanding, factual reliability, and effective context management over very long documents. Issues like hallucinations or subtle misinterpretations can and do arise. Therefore, in practice, AI drafting tools are best viewed as assistive technology for legal professionals, much like a very advanced form of document automation or a supercharged junior associate who never tires. They can dramatically improve efficiency, consistency, and even access to legal drafting for those who might not be able to afford extensive legal help by providing a strong starting template. But they do not eliminate the need for human expertise and oversight. A specialist solicitor must review each AI-generated contract to ensure it meets the client’s needs, is legally sound, and reflects the nuances of the situation.

In the context of English law, where the precision of language can determine court outcomes, this point cannot be overstated: an AI can draft a contract, but only a qualified human lawyer can approve it. The partnership of AI and solicitor can be incredibly powerful, with the AI handling routine drafting and the human focusing on strategic inputs and quality control, and this is likely the model for the foreseeable future of AI in the legal profession. British Contracts’ approach, incorporating RAG and LLMs, exemplifies how cutting-edge technology can be harnessed in a legal knowledge base to assist with contract drafting, while also demonstrating why it is deployed in a way that keeps lawyers in the loop.

To wrap up, imagine the near future of contract drafting as a highly collaborative process: the solicitor defines the objectives and key terms, the AI produces a comprehensive first draft in moments, grounded in the collective wisdom of thousands of precedents, and then the solicitor methodically reviews and fine-tunes that draft to perfection. The outcome is a well-crafted contract produced in a fraction of the time it once took, with technology handling the routine drafting and humans ensuring excellence and accountability. AI drafting with RAG and LLMs is a game-changer, not because it replaces lawyers but because it empowers them to work smarter and focus on what truly requires human judgment and expertise. AI represents an evolution in legal practice, combining the consistency and speed of machines with the insight and oversight of experienced solicitors to better serve clients in creating sound legal agreements.