What Are “Embeddings” in Plain English?

In the context of AI and language, an embedding is a numerical representation of a piece of text, something like a fingerprint or a set of coordinates, that captures the text’s meaning. When you input some text (a word, a sentence, or a whole clause) into an embedding model, it outputs a list of numbers (a vector) that corresponds to that text. This isn’t random: the model is trained so that texts with similar meanings get similar vectors. In other words, the embedding is a point in a high-dimensional space that encodes the essence of the text. For example, the sentence “The contractor shall maintain confidentiality” might be transformed into a vector of hundreds of numbers. Another sentence, such as “The freelancer must keep information secret,” would produce a vector numerically close to the first one, reflecting that the two sentences convey roughly the same meaning even though the wording is different. By contrast, a sentence about an unrelated topic (e.g. “The tenant will paint the walls”) would have a very different vector.

How does raw text become a vector? Modern AI models, typically neural networks, are trained on large bodies of text so that they learn to associate words and contexts with numerical patterns. When such a model is used as an embedding model, it passes the input text through many layers of mathematical transformations and outputs a series of numbers. Each number acts loosely like a “feature” capturing some aspect of meaning, although individual dimensions rarely correspond to a single concept a human could name. The result is a dense vector (dense meaning most numbers are non-zero), typically with anywhere from a few hundred to a few thousand dimensions. This vector is the embedding. In a search system, both the user’s query and all searchable content are converted into such vectors: high-dimensional numeric representations that capture the semantic meaning of the text. The key point is that these vectors aren’t arbitrary; they are arranged in a way that reflects the content’s meaning. So, think of an embedding as a distillation of text into numbers that a computer can easily compare with the numbers for other text.
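A toy sketch can make the idea concrete. It is not how a modern neural embedding model works internally (real models learn their values through many layers of training); it assumes a small, hypothetical table of hand-made 3-dimensional word vectors and builds a sentence vector by averaging the vectors of the words it knows (mean pooling), then scaling the result to unit length. The names `WORD_VECTORS` and `embed` and all the numbers are illustrative only.

```python
import math

# Hypothetical, hand-made word vectors: 3 dimensions instead of hundreds.
# A real model learns its values from data; these are invented for illustration.
WORD_VECTORS = {
    "contractor": [0.9, 0.1, 0.0],
    "freelancer": [0.8, 0.2, 0.0],
    "confidentiality": [0.1, 0.9, 0.1],
    "secret": [0.2, 0.7, 0.1],
    "tenant": [0.0, 0.1, 0.9],
    "walls": [0.0, 0.0, 1.0],
}
DIMS = 3


def embed(text: str) -> list[float]:
    """Toy embedding: average the vectors of known words, then scale to unit length."""
    tokens = [word.strip(".,;:").lower() for word in text.split()]
    known = [WORD_VECTORS[t] for t in tokens if t in WORD_VECTORS]
    if not known:
        return [0.0] * DIMS  # no known words: return the zero vector
    mean = [sum(vec[i] for vec in known) / len(known) for i in range(DIMS)]
    length = math.sqrt(sum(x * x for x in mean))
    return [x / length for x in mean]


a = embed("The contractor shall maintain confidentiality")
b = embed("The freelancer must keep information secret")
c = embed("The tenant will paint the walls")
# For unit-length vectors, the dot product equals the cosine similarity.
print(sum(x * y for x, y in zip(a, b)))  # similar meaning: close to 1
print(sum(x * y for x, y in zip(a, c)))  # unrelated topic: much lower
```

With these invented numbers, the contractor and freelancer sentences end up pointing in almost the same direction while the tenant sentence points elsewhere, mirroring the example in the opening paragraph.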

Capturing Semantic Meaning

Embeddings are powerful because they capture concepts and context, not just literal words. Two pieces of text that express the same idea will have embeddings that are mathematically close, even if they share few keywords. Words and phrases with similar meanings are mapped to nearby points in the vector space. In practical terms, this means an embedding can recognise synonyms or related phrases. For example, “terminate the agreement” and “end the contract” would likely yield nearby vectors, because the model has learned that “terminate” and “end”, and “agreement” and “contract”, are used in similar contexts.

This semantic clustering isn’t limited to single words; it also works for entire sentences and paragraphs. An AI system might place an employment contract termination clause and a redundancy clause close together in vector space because their content is conceptually related (both deal with ending employment arrangements). Because embeddings encode context, even an ambiguous word or reference can be interpreted in situ. For instance, in the clause “the Contractor shall return all materials,” the contractual context suggests that “materials” refers to documents or work products, and a good embedding model reflects that reading in the vector it produces. Essentially, the embedding space is organised by ideas and meaning: texts about intellectual property will reside in one region of this space, texts about payment terms in another, and so on. This property allows AI to measure how similar two pieces of text are in meaning by comparing their vectors, most often with cosine similarity, which scores how closely two vectors point in the same direction.
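Cosine similarity is simple to compute. The minimal Python sketch below implements the standard formula (dot product divided by the product of the vector lengths); the three 4-dimensional vectors are invented stand-ins for real embeddings, labelled with the phrases from the example above purely for illustration.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between a and b: ranges from -1 to 1, where 1.0 means same direction."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Invented 4-dimensional stand-ins for real embeddings.
terminate_agreement = [0.8, 0.1, 0.5, 0.2]
end_contract = [0.7, 0.2, 0.6, 0.1]
paint_walls = [0.0, 0.9, 0.1, 0.8]

print(round(cosine_similarity(terminate_agreement, end_contract), 3))
print(round(cosine_similarity(terminate_agreement, paint_walls), 3))
```

Here the first pair scores about 0.98 and the second about 0.26. Cosine similarity ignores vector length and looks only at direction, which is one reason it is a common default for comparing embeddings; when a model returns unit-length vectors, it reduces to a plain dot product.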

Traditional Keyword Search vs. Semantic Search with Embeddings

It’s helpful to compare how a traditional legal search works versus an embedding-based semantic search. Traditional keyword search has been our go-to method for years. You type in a word or phrase, and the system tries to find documents containing those exact terms (or a close variant). It’s a literal matching approach. If you search for “indemnity clause”, you’ll only get documents where “indemnity” appears. This can be fast and precise when you know the exact terminology or clause name to look for. However, legal language is rich with synonyms and varying phrasing. A lawyer might refer to an “IP ownership clause” in conversation, but the precedent library might label it “Intellectual Property Rights – Ownership”. A keyword search for “IP ownership” could miss that clause because the abbreviation “IP” never appears in it, and a clause headed simply “Intellectual Property Rights” contains neither keyword at all.
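The limitation is easy to reproduce. The sketch below is a deliberately naive keyword search in which every query word must appear literally in the clause text; the function names and the two sample clauses are hypothetical. Real keyword engines add stemming and relevance ranking, but the core behaviour of literal matching is the same.

```python
def words(text: str) -> set[str]:
    """Lower-cased words with surrounding punctuation removed."""
    return {w.strip(".,;:()").lower() for w in text.split()}


def keyword_search(query: str, documents: dict[str, str]) -> list[str]:
    """Return titles of documents whose text contains every query word literally."""
    terms = words(query)
    return [title for title, text in documents.items() if terms <= words(text)]


# Hypothetical clause-bank entries, invented for illustration.
clauses = {
    "Intellectual Property Rights": "All intellectual property rights created by the Consultant vest in the Company.",
    "Indemnity": "The Supplier shall indemnify the Customer against all losses.",
}

print(keyword_search("IP ownership", clauses))  # [] : neither "ip" nor "ownership" appears
print(keyword_search("indemnify", clauses))     # ['Indemnity']
```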

Semantic search addresses this issue by considering meaning rather than just exact words. The system converts the query and the documents into embeddings and finds which document vectors are closest to the query vector. As a result, it can find relevant material even when the wording differs. As OpenAI’s documentation explains, “unlike keyword search, which looks for exact word matches, semantic search finds conceptually similar content — even if the exact terms don’t match”. For example, if you search a contracts database for “duty of confidentiality for consultants”, a semantic search could retrieve a clause titled “Non-disclosure by Contractors”, because a consultant’s duty of confidentiality is, in substance, the same idea as a contractor’s non-disclosure obligation, even though the phrasing is different. The underlying embeddings for those texts would be close, signalling a match in meaning.
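In code, semantic search replaces literal matching with a similarity ranking. This minimal sketch assumes the embeddings have already been computed; the 3-dimensional vectors and the clause titles are hypothetical, and a real system would obtain every vector from the same embedding model used for the query.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def semantic_search(query_vec: list[float], index: dict[str, list[float]], top_k: int = 2) -> list[str]:
    """Rank stored items by cosine similarity to the query vector and return the top_k titles."""
    ranked = sorted(index, key=lambda title: cosine_similarity(query_vec, index[title]), reverse=True)
    return ranked[:top_k]


# Hypothetical precomputed embeddings (3 dimensions for readability).
clause_vectors = {
    "Non-disclosure by Contractors": [0.85, 0.10, 0.05],
    "Payment Terms": [0.05, 0.90, 0.10],
    "Termination for Convenience": [0.10, 0.20, 0.90],
}
# Stands in for the embedding of "duty of confidentiality for consultants".
query_vector = [0.80, 0.15, 0.10]

print(semantic_search(query_vector, clause_vectors))
```

The top-ranked title shares no words with the query; the match comes entirely from the vectors (here by construction, in a real system from the embedding model), which is exactly what a literal keyword search cannot do.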

From a user’s perspective, this means you can ask questions or describe what you need in natural language, and the AI can still find what you’re after. One legal tech commentator noted that embeddings allow documents to be found “based on semantic similarity to a search term, rather than the existence of [that] term as a keyword… This solves the pain point around ‘having to type the right thing into the search box’”. In other words, you don’t need to guess the exact wording used in a precedent. This is extremely useful in law, where the same concept can be expressed in myriad ways. A traditional search might fail if your term differs from the one in the document; an embedding-based search can still recognise the similarity.

It’s worth noting that keyword search isn’t obsolete. It is still handy when you know the precise term you want to find (for example, you might specifically want clauses where the phrase “governing law” appears). Many systems offer both methods side by side. Semantic search offers a more intuitive approach, particularly for exploratory queries (“find me something like X…”), and embeddings deliver far more context-aware results than keyword matching alone. The upshot is that lawyers can search in a more natural, conceptual way, a bit like asking a well-informed colleague, rather than having to think of the exact keywords that might appear in a document.

How British Contracts’ AI Uses Embeddings for Legal Documents

British Contracts’ AI-assisted drafting tool utilises embeddings to enhance contract drafting efficiency and accuracy. Our system features a comprehensive knowledge base of legal texts, including precedent clauses, entire template contracts, and practice notes (guidance documents on legal drafting and key concepts). Each of these pieces of text has been processed by an embedding model to create its vector representation. Imagine a database table where one column is the clause or document text, and another column is a long list of numbers (the embedding). This is often referred to as a vector database or vector index. When you, as a user, pose a query or need a certain clause, the AI does the following:

- Your query is embedded: The plain-language query you input (e.g. “I need a clause about IP ownership for a freelance designer”) is fed into the same embedding model, generating a vector for your query. This vector now quantifies the meaning of your request. In our example, the vector should reflect that your query concerns intellectual property and freelance designers (that is, independent contractors), and that it is about owning the IP rather than, say, licensing it.

- Semantic matching in the vector database: The AI system then compares your query’s embedding to the embeddings of all the content in the knowledge base. This is essentially a similarity search in a high-dimensional space: it finds which stored vectors are closest to the query vector. Closeness (measured by cosine similarity or another similarity measure) indicates that the content is likely about the same topic or concept as your query. In our example, the system might find that an “Intellectual Property Ownership” clause from a Consultancy Agreement has an embedding very close to your query embedding. It might also find a relevant practice note explaining IP ownership in contract work, because that practice note’s embedding is also near your query vector. The result is a set of semantically relevant texts, even if none of them contains the exact phrase “freelance designer”; they might say “consultant” or “independent contractor” instead, which the model treats as closely related.

- Retrieving the top matches: The system ranks the content by similarity score and retrieves the top results (for example, the best few clauses and notes on IP ownership for independent contractors). This all happens in a fraction of a second. As the user, you might then see suggested clauses or summaries drawn from these top-ranked pieces of content.
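The three steps above can be sketched as a tiny in-memory index. This is an illustration under stated assumptions, not British Contracts’ implementation: the hypothetical `VectorIndex` class compares the query against every stored row by brute force (production vector databases typically use approximate nearest-neighbour indexes to stay fast at scale), and `toy_embed` is a hypothetical stand-in for a real embedding model that simply maps a few related words (designer, consultant, contractor) onto shared “concept” axes.

```python
import math
from typing import Callable

Vector = list[float]


class VectorIndex:
    """Minimal in-memory 'vector database': rows of (text, embedding), searched by brute force."""

    def __init__(self, embed: Callable[[str], Vector]) -> None:
        # The SAME embedding function must be used for stored content and for queries.
        self.embed = embed
        self.rows: list[tuple[str, Vector]] = []

    def add(self, text: str) -> None:
        self.rows.append((text, self.embed(text)))

    @staticmethod
    def _cosine(a: Vector, b: Vector) -> float:
        dot = sum(x * y for x, y in zip(a, b))
        norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norms if norms else 0.0  # treat zero vectors as "no similarity"

    def search(self, query: str, top_k: int = 3) -> list[tuple[float, str]]:
        query_vec = self.embed(query)  # step 1: embed the query
        scored = [(self._cosine(query_vec, vec), text) for text, vec in self.rows]  # step 2: compare
        scored.sort(key=lambda pair: pair[0], reverse=True)  # step 3: rank by similarity
        return scored[:top_k]


# Hypothetical stand-in for a real embedding model: three "concept" axes
# (0 = intellectual property, 1 = independent contractor, 2 = payment).
CONCEPT_AXES = {
    "intellectual": 0, "property": 0, "ip": 0, "ownership": 0,
    "freelance": 1, "designer": 1, "consultant": 1, "contractor": 1,
    "payment": 2, "invoice": 2, "fees": 2,
}


def toy_embed(text: str) -> Vector:
    vec = [0.0, 0.0, 0.0]
    for word in text.lower().split():
        axis = CONCEPT_AXES.get(word.strip(".,;:"))
        if axis is not None:
            vec[axis] += 1.0
    return vec


index = VectorIndex(toy_embed)
index.add("Intellectual Property Ownership: all IP created by the Consultant belongs to the Company.")
index.add("Payment: each invoice is payable within 30 days of receipt.")
index.add("Practice note: IP ownership when engaging an independent contractor.")

results = index.search("I need a clause about IP ownership for a freelance designer", top_k=2)
for score, text in results:
    print(f"{score:.2f}  {text}")
```

Both IP texts are returned and the payment clause is not, even though neither IP text mentions a “freelance designer”.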

Through embeddings, British Contracts’ AI is effectively doing what a skilled lawyer or knowledge manager might do: recognising that a question about “IP ownership in a freelance designer context” is answered by looking at precedents for intellectual property clauses in contractor agreements. It bridges the gap between the language you used in your query and the language used in formal legal documents. Because records are found by semantic similarity rather than by the presence of a keyword, identifying the right starting point becomes much easier. In a practical scenario, this means an in-house lawyer can type a request in plain English and the AI will retrieve the most relevant contract clauses or guidance notes, even if the lawyer didn’t use the exact technical terms. This is extremely helpful when drafting, because lawyers often know what they want to achieve (the outcome or concept) but not how the repository happens to phrase it.

It’s also important to note that the British Contracts system is aligned with English law and practice. The embeddings were generated using models trained on legal language, specifically on UK legal terminology. This ensures that, for instance, a query about “restrictive covenants for employees” will bring up content about post-termination non-compete and non-solicitation clauses relevant to English employment contracts, rather than irrelevant or US-centric results. By using practice notes and precedents grounded in English law, the AI’s recommendations stay context-appropriate. In essence, the embeddings help the AI not only find semantically relevant text but also domain-relevant text (materials drawn from the correct jurisdiction or practice area).

As a safeguard, if you do know exactly what you’re looking for, say a clause containing a specific phrase or obligation, you can still conduct a targeted keyword search. British Contracts integrates both approaches, allowing users to combine semantic power with precision filtering (for example, first use embeddings to generate candidates, then filter by document type or a specific term). The goal is to make finding the correct clause or form much quicker than hunting through folders or asking colleagues, and embeddings are the key technology that enables this semantic match.
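The two-stage pattern described here (semantic candidates first, exact filters second) can be sketched in a few lines. This assumes each candidate already carries a similarity score from the embedding search and a document-type label; the `Candidate` fields, the `filter_candidates` helper, the sample texts and the scores are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    text: str
    doc_type: str  # hypothetical metadata field, e.g. "clause" or "practice note"
    score: float   # cosine similarity assigned by the semantic (embedding) step


def filter_candidates(
    candidates: list[Candidate],
    doc_type: Optional[str] = None,
    required_term: Optional[str] = None,
    top_k: int = 3,
) -> list[Candidate]:
    """Keep semantic candidates that pass the exact filters, best similarity first."""
    kept = [
        c
        for c in candidates
        if (doc_type is None or c.doc_type == doc_type)
        and (required_term is None or required_term.lower() in c.text.lower())
    ]
    kept.sort(key=lambda c: c.score, reverse=True)
    return kept[:top_k]


# Invented candidates, as if returned by an embedding search for "which law applies?"
candidates = [
    Candidate("The governing law of this Agreement is the law of England and Wales.", "clause", 0.91),
    Candidate("Practice note: choosing a governing law clause.", "practice note", 0.88),
    Candidate("The courts of England shall have exclusive jurisdiction.", "clause", 0.86),
]

for c in filter_candidates(candidates, doc_type="clause", required_term="governing law"):
    print(c.score, c.text)
```

Running the semantic step first keeps recall high; the exact filters then restore the precision of a keyword search where the user needs it.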

Embeddings in the RAG Workflow: Retrieving and Drafting

British Contracts’ AI drafting tool doesn’t just find relevant text. It also uses it to help draft new contract language. This approach is known as Retrieval-Augmented Generation (RAG). In a RAG system, the AI combines a search step with a text generation step. Embeddings play a central role in the retrieval part of this pipeline. To understand how it all fits together, let’s break down the workflow.

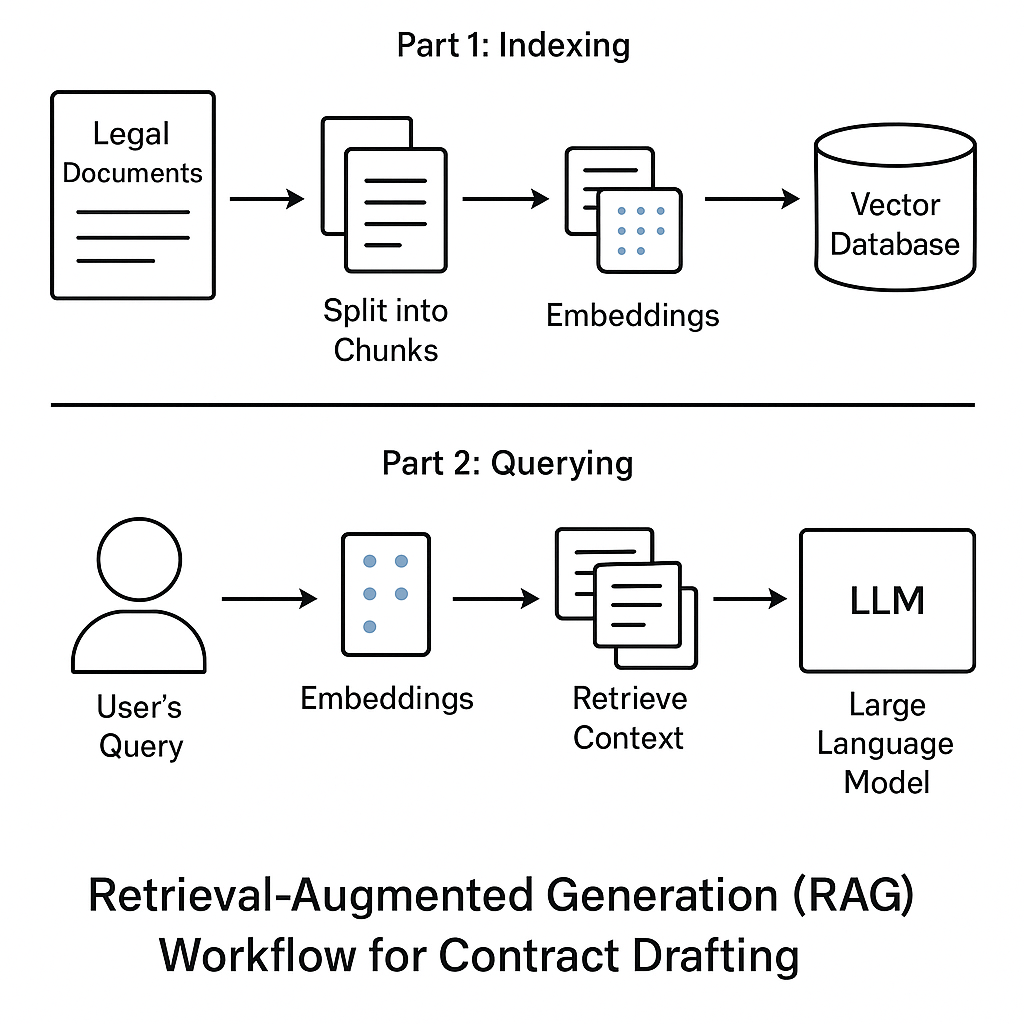

Figure: Retrieval-Augmented Generation (RAG) workflow for contract drafting. In Part 1: Indexing, legal documents (precedents, clauses, notes) are split into chunks and transformed into embeddings (vector representations), which are stored in a vector database (an index for semantic search). In Part 2: Querying, a user’s query or prompt is converted into an embedding using the same model, and the vector database is searched for similar embeddings. The most relevant chunks of text are retrieved as context. The large language model (LLM), here a GPT-based model, then receives a prompt consisting of the user’s request augmented with the retrieved context, and uses it to generate a tailored answer or draft clause.
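The splitting step in Part 1 can be as simple as packing paragraphs into size-limited chunks. The sketch below assumes paragraphs are separated by blank lines and uses an arbitrary 500-character default; `chunk_document` is a hypothetical helper, and real pipelines often split on clause numbering or token counts and may overlap neighbouring chunks. Embedding and storing each chunk would follow, using whichever embedding model and vector database the system relies on.

```python
def chunk_document(text: str, max_chars: int = 500) -> list[str]:
    """Pack blank-line-separated paragraphs into chunks of at most max_chars characters.

    A single paragraph longer than max_chars becomes its own chunk rather than
    being cut mid-sentence.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current = ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if not current or len(candidate) <= max_chars:
            current = candidate  # start a chunk, or grow the current one
        else:
            chunks.append(current)  # current chunk is full: close it
            current = para
    if current:
        chunks.append(current)
    return chunks


sample = (
    "1. Definitions\n\n"
    "In this Agreement, 'Services' means the design services described in Schedule 1.\n\n"
    "2. Intellectual Property\n\n"
    "All intellectual property created in the course of the Services shall belong to the Client."
)
for chunk in chunk_document(sample, max_chars=120):
    print("---")
    print(chunk)
```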

In simpler terms, the RAG process does the following:

- Offline (preprocessing/indexing): All reference materials (contracts, clause bank, practice notes) are ingested into the system. Long documents are broken into smaller paragraphs or clauses for finer search granularity. Each piece is run through an embedding model to produce a vector. The system stores these vectors in a special database optimised for similarity search (a vector DB). Think of this as loading up a smart index with all your knowledge pieces, where concepts rather than keywords organise the index.

- Online (query-time retrieval): When you pose a query or drafting instruction, the system immediately embeds your query into a vector. It then looks this vector up in the index to find the closest stored vectors; in effect, it finds the pieces of stored text that are semantically closest to your request. Those top pieces (say, the 3–5 most relevant passages) are pulled out as retrieved context. In our running example, the system might retrieve a standard “Intellectual Property Ownership” clause and a snippet from a practice note about IP in freelancer agreements.

- Generation with context: Now the AI (the large language model component) is prompted with your original query plus the retrieved texts as reference material. For instance, the prompt to the model may be: “User asks: ‘Draft an IP ownership clause for a freelance designer contract.’ Here are relevant sources: [clause text snippet]; [practice note snippet]… Please draft a clause using these as guidance.” Because the model has those relevant details on hand, it can generate a clause that is both contextually accurate and tailored to the query. This is the “augmented generation” part; the model’s output is grounded in real, retrieved legal language, not just its own training data.
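The augmentation step is mostly string assembly. The hypothetical `build_rag_prompt` helper below mirrors the example prompt in the last bullet, numbering each retrieved snippet so that the model (and the reviewing lawyer) can tell the sources apart. The `max_sources` cap of 5 is an assumption, and the call to the language model itself is omitted because it depends on the provider’s API.

```python
def build_rag_prompt(user_request: str, retrieved: list[str], max_sources: int = 5) -> str:
    """Combine the user's request with up to max_sources retrieved snippets into one prompt."""
    sources = "\n".join(
        f"[Source {i}] {snippet.strip()}"
        for i, snippet in enumerate(retrieved[:max_sources], start=1)
    )
    return (
        f"User asks: '{user_request}'\n"
        f"Here are relevant sources:\n{sources}\n"
        "Please draft a clause using these sources as guidance."
    )


prompt = build_rag_prompt(
    "Draft an IP ownership clause for a freelance designer contract.",
    [
        "All intellectual property created by the Consultant in the course of the Services "
        "shall belong to the Company.",
        "Practice note extract: IP ownership provisions in freelance contracts.",  # hypothetical
    ],
)
print(prompt)
```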

By using RAG, British Contracts’ AI helps keep the drafting assistance it provides up to date and reliable. The embeddings-driven retrieval brings in facts and language from the knowledge base (for example, the latest standard clauses vetted by the legal team), and the GPT-based generator then weaves that information into a cohesive draft. OpenAI’s documentation describes this flow: chunks of documents are embedded and stored; when a question is asked, the question is embedded, and “semantically similar chunks” are retrieved; those chunks are then “included as context in the prompt to generate a more informed answer”. In a legal drafting scenario, that means the model isn’t writing in a vacuum. It is looking at actual precedent text as it drafts, which significantly reduces the chance of errors or imaginative “hallucinations” that wouldn’t pass legal muster.

To illustrate, let’s return to the example query about an IP ownership clause for a freelance designer. Using RAG, the AI might retrieve an existing clause that says: “All intellectual property created by the Consultant in the course of the Services shall belong to the Company”. It might also retrieve a note explaining typical IP ownership provisions in freelance contracts. With these in hand, the language model can draft a new clause along the lines of: “The Contractor agrees that any intellectual property created in the course of performing the Services will be owned by the Client. The Contractor will execute any documents necessary to transfer such rights to the Client.” This output clause reflects the substance of the retrieved examples, adapted to the user’s specific scenario (using terms like “Contractor” and “Client” consistent with that context). The heavy lifting in finding the right content was done by embeddings; the finesse in phrasing a new clause is done by the generation model.

Why Does This Matter for Lawyers?

For legal professionals, the combination of embeddings and RAG is a game-changer in contract drafting workflows. It means that an AI assistant can truly understand a request in your own words and fetch the relevant legal knowledge to address it. No more combing through dozens of documents hoping to find that one clause that fits your needs; the AI does that for you in seconds, based on meaning. This accelerates the drafting process, helping lawyers focus on reviewing and tailoring content rather than locating it. It’s not hard to see the benefit: imagine you need to draft a liability cap clause for a new agreement. You could search your organisation’s precedent library manually, or you could ask the AI assistant. If you ask the AI, “Give me a limitation of liability clause for a software supply contract, under English law, liability capped at £X, excluding indirect losses,” the system will use embeddings to pull up the closest matches (perhaps a few clauses from past software contracts and a practice note on liability clauses). Using those, it will produce a draft clause for you. You, of course, will then refine and confirm the clause, but the first draft appears in moments, grounded in precedent language.

It is important to stress that human expertise remains crucial. The AI can provide a huge productivity boost by finding the right information and drafting an initial version, but a qualified lawyer should always review and check any AI-drafted clause or contract before use. The AI might not fully grasp nuances, such as whether a precedent clause is “toxic” or otherwise not ideal (something lawyers would know from experience). In practice, British Contracts’ tool is there to assist, not replace, the lawyer, much like an intelligent paralegal who can instantly gather materials and propose language, while the lawyer provides oversight and final judgment.

Embeddings allow AI systems like ours at British Contracts to work with legal language in a meaningful way. By transforming text into vectors that capture legal semantics, the AI can bridge the gap between a lawyer’s natural query and the formal texts in a legal database. Combined with a retrieval-augmented generation approach, this enables the drafting assistant to produce accurate, relevant, and context-specific contract clauses on demand. For lawyers and contract managers, this technology translates to faster drafting, more comprehensive use of available knowledge (since the AI can search widely and deeply), and greater confidence that no relevant precedent is overlooked due to quirky wording. It brings us a step closer to the holy grail of contract drafting: never starting from a blank page, and always leveraging the collective knowledge embedded in past contracts and guidelines. The magic behind that, as we’ve seen, lies in embeddings: the hundreds of numbers that represent our words, quietly doing the heavy lifting in the background.